-

/*-------------------------------

-------------------------------

17th century

-------------------------------

-------------------------------*/

- 17th Century

/*-------------------------------

-------------------------------

John Graunt

-------------------------------

-------------------------------*/

-

John Graunt

1620-1674

Studied the records of deaths in London kept from the early 1600s onward and produced a single publication on them. The weekly bills of mortality, which had been collected since 1603, were designed to detect outbreaks of plague. Graunt put the data into tables, wrote a commentary on them and made basic calculations. He discussed the reliability of the data and compared the numbers of male and female births and deaths. In the course of estimating the population of London, Graunt produced a primitive life table. The life table became one of the main tools of demography and insurance mathematics.

/*-------------------------------

-------------------------------

PASCAL AND FERMAT

-------------------------------

-------------------------------*/

-

Blaise Pascal and Pierre de Fermat

1623-1662 and 1601-1665

The origins of probability are usually traced to the correspondence between Pascal and Fermat, in which they treated several problems associated with games of chance. The letters were not published, but their contents were known in Parisian scientific circles. Pascal’s only publication on probability appeared posthumously; it treated Pascal’s triangle and its applications to probability. Pascal introduced the concept of expectation and discussed the problem of gambler’s ruin.

/*-------------------------------

-------------------------------

HUYGENS

-------------------------------

-------------------------------*/

-

Christiaan Huygens

1629-1695

Huygens wrote the first book on probability (really a pamphlet), translated into English as "The Value of all Chances in Games of Fortune etc." Huygens drew on the ideas of Pascal and Fermat, which he had encountered when he visited Paris. Much of the book is devoted to calculating the expectation of a game of chance. The problems in the book include the gambler’s ruin and the hypergeometric distribution.
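To make "the expectation of a game of chance" concrete, here it is in modern notation (this is today's textbook form, not Huygens's own wording or symbols): a game that pays $x_i$ with probability $p_i$ is worth the probability-weighted sum of its payoffs. The second line is the special case of two equally likely payoffs $a$ and $b$, which is essentially the opening proposition of Huygens's book.

```latex
E = \sum_{i=1}^{n} p_i \, x_i, \qquad \sum_{i=1}^{n} p_i = 1

E = \tfrac{1}{2}\,a + \tfrac{1}{2}\,b = \frac{a + b}{2}
```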

/*-------------------------------

-------------------------------

18th century

-------------------------------

-------------------------------*/

- 18th Century

/*-------------------------------

-------------------------------

BERNOULLI

-------------------------------

-------------------------------*/

-

Jakob (James) Bernoulli

1654-1705

His only publication on probability, Ars Conjectandi, was extremely influential. The work was an important contribution to combinatorics: the term permutation originated here. Bernoulli used the terms "a priori" and "a posteriori" to distinguish two ways of deriving probabilities: they can be deduced a priori (without experience) when there are specially constructed devices, like dice; otherwise they can be deduced a posteriori from many observed outcomes of similar events. Bernoulli’s theorem, or the Law of Large Numbers, was the work’s most spectacular contribution. The binomial distribution also comes from this work. The eponymous Bernoulli trials, Bernoulli numbers and Bernoulli random variable all refer to this Bernoulli and his publication.

/*-------------------------------

-------------------------------

de Moivre

-------------------------------

-------------------------------*/

-

Abraham de Moivre

1667-1754

De Moivre obtained the normal approximation to the binomial distribution (a forerunner of the Central Limit Theorem) and almost found the Poisson distribution. He also wrote on the mathematics of life insurance in his analysis of annuities.

/*-------------------------------

-------------------------------

BAYES

-------------------------------

-------------------------------*/

-

Thomas Bayes

1702-1761

Bayes's formula, Bayes's rule and Bayes's theorem are all names used today for a basic theorem on conditional probability.
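For reference, the theorem that now carries Bayes's name is usually stated in modern notation as below, for events $A$ and $B$ with $P(B) > 0$; $A^c$ denotes the complement of $A$. This is the modern textbook form, not the formulation in Bayes's own essay. The second line expands the denominator with the law of total probability.

```latex
P(A \mid B) = \frac{P(B \mid A)\, P(A)}{P(B)}

P(B) = P(B \mid A)\, P(A) + P(B \mid A^c)\, P(A^c)
```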

/*-------------------------------

-------------------------------

LAPLACE

-------------------------------

-------------------------------*/

-

Pierre-Simon Laplace

1749-1827

Laplace wrote on probability over a period of more than 50 years and made many contributions, among them the Central Limit Theorem (the result of almost forty years’ effort). In addition, Lagrange and Laplace produced interval statements about the parameter of the binomial distribution in the 1780s; these are ancestors of the modern confidence interval.

/*-------------------------------

-------------------------------

GAUSS

-------------------------------

-------------------------------*/

-

Carl Friedrich Gauss

1777-1855

Gauss is generally regarded as one of the greatest mathematicians of all time, and his contributions to the theory of errors were only a small part of his total output. Legendre published the method of least squares in 1805, but it was Laplace and Gauss who, by 1825, had tied the method to the theory of probability.

/*-------------------------------

-------------------------------

19th century

-------------------------------

-------------------------------*/

- 19th Century

/*-------------------------------

-------------------------------

QUETELET

-------------------------------

-------------------------------*/

-

Lambert Quetelet

1796-1874

Used descriptive statistics to analyze crime and mortality data and studied census techniques. Described normal distributions in connection with human traits such as height.

/*-------------------------------

-------------------------------

GALTON

-------------------------------

-------------------------------*/

-

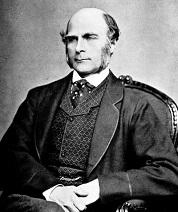

Francis Galton

1822-1911

The founding father of biometry. Cousin of, and statistical advisor to, Charles Darwin. The normal distribution played an important part in Galton’s work. He is best remembered for introducing the methods of correlation and regression, which he used to study genetic variation in humans. His statistical methods were taken up by psychologists in Britain. In addition, he contributed a large number of terms to statistics, including ogive, percentile and interquartile range.

/*-------------------------------

-------------------------------

P. L. Chebyshev

-------------------------------

-------------------------------*/

-

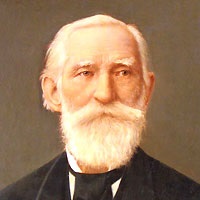

P. L. Chebyshev

1821-1894

Notable Russian mathematician who gave us his eponymous inequality. Chebyshev’s inequality guarantees that, for any data set and any k > 1, at least 1 - 1/k² of the values lie within k standard deviations of the mean. Chebyshev also helped firm up the Law of Large Numbers by proving it in a more general form, and he gave a proof of the Central Limit Theorem by a different method. Among his students were Markov and Lyapunov.
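Chebyshev's inequality says that, whatever the shape of the data, at least 1 - 1/k² of the values lie within k standard deviations of the mean (informative for k > 1). The minimal sketch below checks that guarantee numerically on a small data set. The helper names and the sample values are hypothetical, chosen only for illustration; the check uses the population standard deviation (`statistics.pstdev`), for which the inequality holds exactly on any finite data set.

```python
import statistics


def fraction_within_k_sd(data, k):
    """Fraction of values within k population standard deviations of the mean."""
    mu = statistics.fmean(data)
    sigma = statistics.pstdev(data)
    inside = sum(1 for x in data if abs(x - mu) <= k * sigma)
    return inside / len(data)


def chebyshev_lower_bound(k):
    """Chebyshev's guarantee: at least 1 - 1/k**2 of any data set lies within k SDs.

    The bound is only informative for k > 1; for 0 < k <= 1 it is clamped to 0.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    return max(0.0, 1 - 1 / k**2)


# Hypothetical, deliberately skewed sample with one large outlier (not from the source).
data = [1, 1, 2, 2, 2, 3, 3, 4, 5, 20]

for k in (1.5, 2, 3):
    observed = fraction_within_k_sd(data, k)
    bound = chebyshev_lower_bound(k)
    print(f"k={k}: observed {observed:.2f}, Chebyshev bound {bound:.2f}")
```

The observed fractions typically sit well above the bound; Chebyshev's result trades tightness for holding on every data set, with no assumption about the distribution.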

/*-------------------------------

-------------------------------

20th century

-------------------------------

-------------------------------*/

- 20th Century

/*-------------------------------

-------------------------------

PEARSON

-------------------------------

-------------------------------*/

-

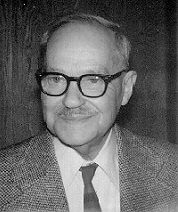

Karl Pearson

1857-1936

Protégé and biographer of Francis Galton. In 1901, Pearson, Galton and the zoologist Walter Weldon founded Biometrika, a peer-reviewed scientific journal to promote the study of biometrics. Pearson’s contribution consisted of new techniques and eventually a new theory of statistics based on the Pearson curves, correlation, the method of moments and the chi-square test. Pearson was eager for his statistical approach to be adopted in other fields. He created a very powerful school, and for decades his department was the only place to learn statistics. Many distinguished statisticians attended Pearson's lectures or started their careers working for him. Pearson had a great influence on the language and notation of statistics: he coined the terms normal (distribution), histogram and standard deviation, and he established the population-sample terminology used in theoretical statistics.

/*-------------------------------

-------------------------------

GOSSET

-------------------------------

-------------------------------*/

-

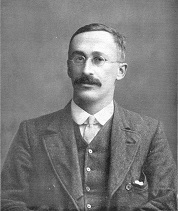

William Sealy Gosset

1876-1937

Chemist, brewer and statistician. Gosset was an Oxford-educated chemist who worked for Guinness, the Dublin brewer. He taught himself the theory of errors from textbooks. His career as a publishing statistician began after he studied for a year with Karl Pearson. In his first published paper, ‘Student’ (the name he published under) rediscovered the Poisson distribution. In 1908 he published two papers on small-sample distributions: one on Student's t distribution, which addressed the problems connected with small sample sizes, and one on correlation in normal samples. Although Gosset’s fame rests on the t distribution, he wrote on other topics; his work for Guinness and the farms that supplied it led him to agricultural experiments. Gosset was a marginal figure until Fisher built on his small-sample work and transformed him into a major figure in 20th-century statistics.

/*-------------------------------

-------------------------------

SPEARMAN

-------------------------------

-------------------------------*/

-

Charles Spearman

1863-1945

English psychologist known for his work in statistics, as a pioneer of factor analysis, and for Spearman's rank correlation coefficient. He was the first to develop intelligence testing using factor analysis.

/*-------------------------------

-------------------------------

FISHER

-------------------------------

-------------------------------*/

-

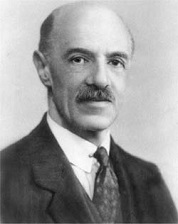

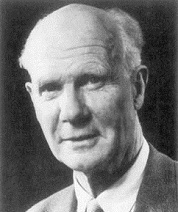

R. A. Fisher

1890-1962

Statistician, evolutionary biologist, mathematician, and geneticist. Fisher's important contributions to statistics include the analysis of variance (ANOVA), maximum likelihood, fiducial inference, and the derivation of various sampling distributions. Fisher coined many terms still in everyday use, including "statistic" and "sampling distribution." He influenced statisticians such as Bose mainly through his writings.

/*-------------------------------

-------------------------------

E.Pearson and Neyman

-------------------------------

-------------------------------*/

-

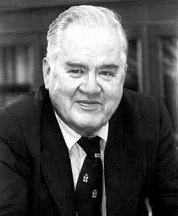

Jerzy Neyman and Egon Pearson

1894-1981 and 1895-1980

Jerzy Neyman and Karl Pearson’s son Egon Pearson developed what became the standard classical approach to hypothesis testing. Their work presented a general theory of hypothesis testing, featuring such characteristic concepts as size, power, Type I error and critical region. Neyman also developed the theory of confidence intervals.

/*-------------------------------

-------------------------------

WILCOXON

-------------------------------

-------------------------------*/

-

Frank Wilcoxon

1892-1965

Chemist and statistician. Over his career Wilcoxon published more than 70 papers. His best-known paper introduced the two statistical tests that still bear his name, the Wilcoxon rank-sum test and the Wilcoxon signed-rank test. These are non-parametric alternatives to the unpaired and paired Student's t-tests, respectively.

/*-------------------------------

-------------------------------

TUKEY

-------------------------------

-------------------------------*/

-

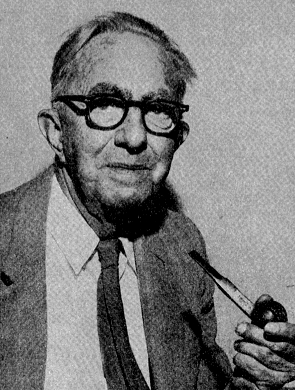

John Tukey

1915-2000

Mathematician. Worked at Princeton during World War II. Introduced exploratory data analysis techniques such as the boxplot and the stem-and-leaf plot. Tukey also worked at Bell Laboratories and is known for his work in inferential statistics and for the Fast Fourier Transform (FFT) algorithm. He coined the computer term "bit."

/*-------------------------------

-------------------------------

KENDALL

-------------------------------

-------------------------------*/

-

David Kendall

1918-2007

Mathematician and statistician. Worked at Princeton and Cambridge. Was a leading authority on applied probability and data analysis.

SOURCE: Figures from the History of Probability and Statistics by John Aldrich.

SOURCE: Earliest Known Uses of Some of the Words of Mathematics by Jeff Miller et al.